Improving React page performance with AI agents

I recently used AI agents to optimize a slow React page. The fixes reduced the p95 frame time by ~76%, and I was barely in the loop. The process left me feeling highly AI-leveraged, yet aware that I was barely scratching the surface of what agents can achieve today.

The problem

Our AI Sourcing interface streams AI-generated messages in real time. Each message can have attachments, tool calls, nested content from subagents, thinking text, and more. It’s a fairly rich interface, and we had put effort into ensuring all transitions were smooth as content animated in or out, to make sure users didn’t get lost in the stream of information.

As the application grew in complexity and scope, we found that it lagged on lower-spec computers: poor frame rates and stuttery animations.

Putting agents to work

Agents are best at "getting to done" when they can verify their work. I needed a way to deterministically test the performance of the page, so the agent would know whether its fix was useful or not.

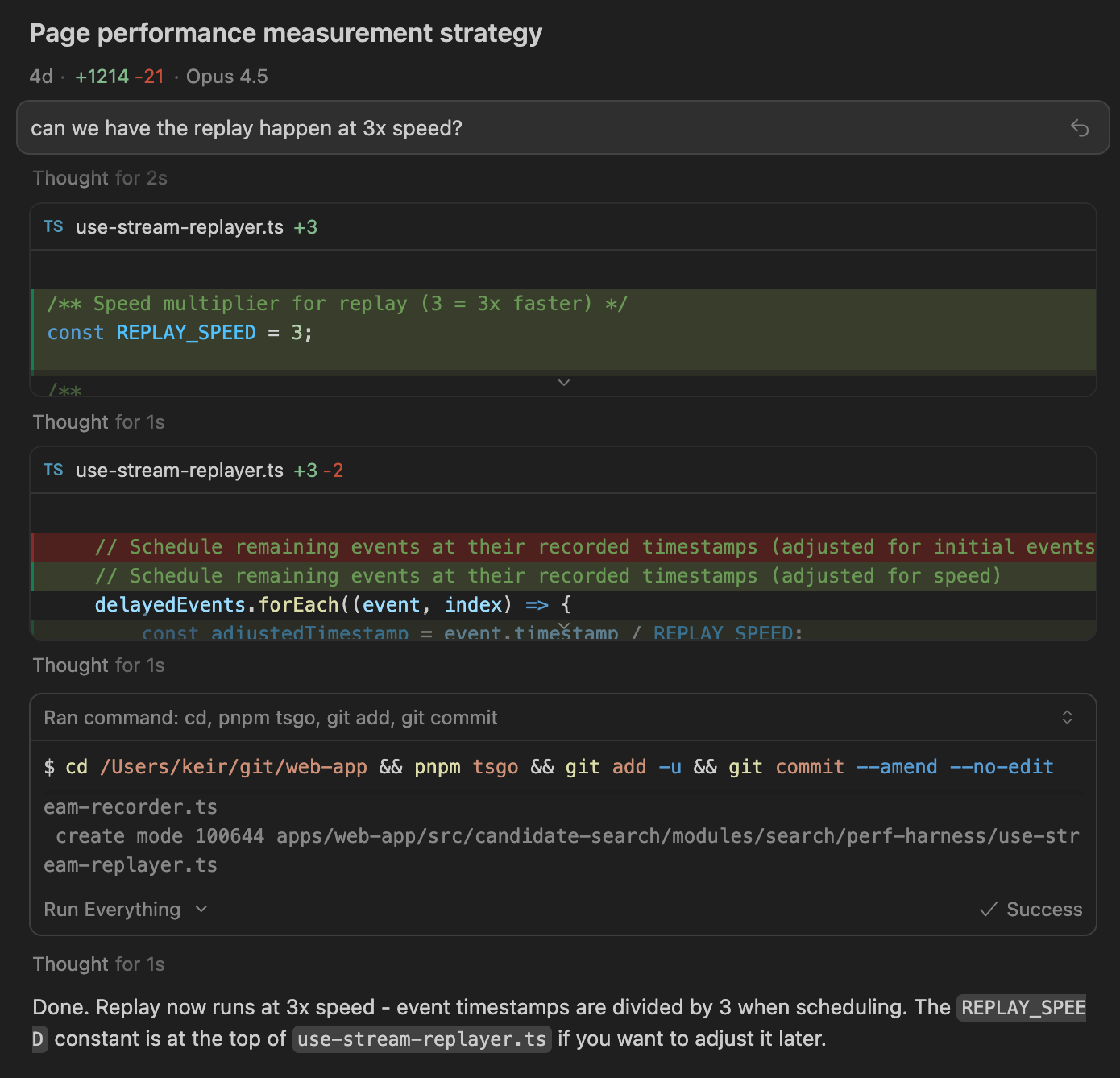

I used Cursor's agent with Claude Opus 4.5 throughout this process. The AI Sourcing page is backed by events, so I asked Opus to build a harness that records and replays events, allowing us to measure page performance under realistic conditions.

The harness:

- Records all events from a real AI Sourcing chat

- Replays them at 3x speed

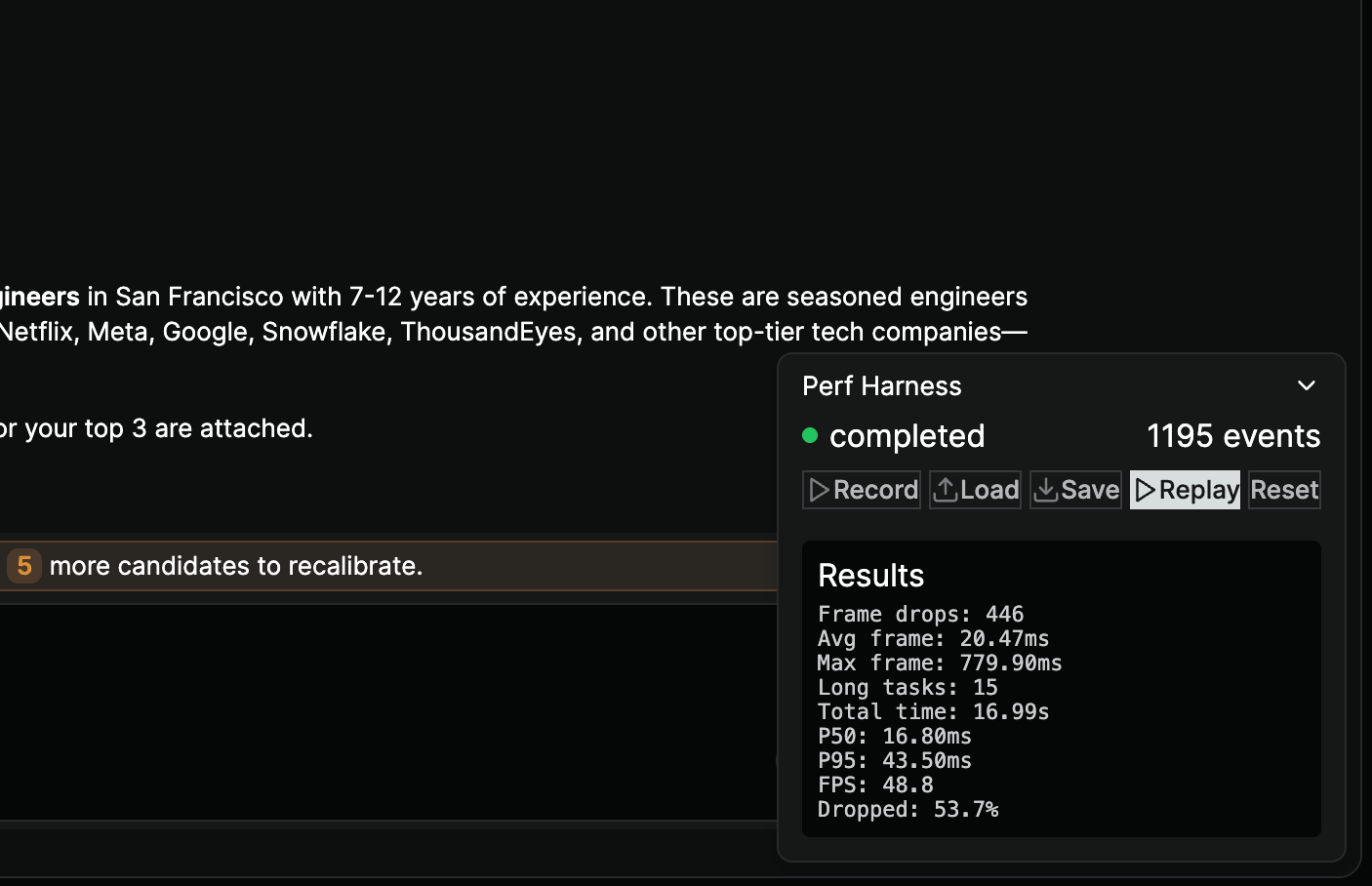

- Measures frame drops, P95 frame time, FPS, and long tasks

- Runs with artificial CPU slowdown to simulate low-spec machines
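The measurement side of a harness like this can be quite small. Here is a minimal sketch, assuming frame timestamps are collected from `requestAnimationFrame` callbacks and a 60 Hz display. The names (`summarizeFrames`, `FrameSummary`), the nearest-rank percentile, and the dropped-frame estimate are illustrative choices of mine, not the harness the agent actually built. Long tasks are left out; in a browser they would typically come from a `PerformanceObserver`.

```typescript
// Summary of one benchmark run, derived purely from frame timestamps (ms).
interface FrameSummary {
  frameCount: number;
  avgFrameMs: number;
  p95FrameMs: number;
  fps: number;
  droppedFrames: number;
}

// Nearest-rank percentile over an unsorted list of numbers.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no values");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Assumption: timestamps are ascending, one per rendered frame.
function summarizeFrames(timestamps: number[], refreshHz = 60): FrameSummary {
  if (timestamps.length < 2) throw new Error("need at least two timestamps");

  // Time between consecutive frames.
  const deltas: number[] = [];
  for (let i = 1; i < timestamps.length; i++) {
    deltas.push(timestamps[i] - timestamps[i - 1]);
  }

  const totalMs = timestamps[timestamps.length - 1] - timestamps[0];
  const avgFrameMs = totalMs / deltas.length;
  const budgetMs = 1000 / refreshHz;

  // Estimate: a frame spanning N refresh intervals missed N - 1 of them.
  const droppedFrames = deltas.reduce(
    (sum, d) => sum + Math.max(0, Math.round(d / budgetMs) - 1),
    0,
  );

  return {
    frameCount: deltas.length,
    avgFrameMs,
    p95FrameMs: percentile(deltas, 95),
    fps: 1000 / avgFrameMs,
    droppedFrames,
  };
}
```

Keeping the summary a pure function of the timestamps means the same recorded run always produces the same numbers, which is what makes before/after comparisons meaningful.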

I ran the dev server locally and used Cursor's built-in browser to open the app. The agent could navigate to the page and trigger the replay, giving me a repeatable benchmark. Same data, same timing, same everything - just the code changes.
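The "same data, same timing" property comes from deriving every replay delay from the recorded timestamps. A rough sketch of that idea, assuming each recorded event carries the time it originally arrived; `RecordedEvent`, `buildReplaySchedule`, and `replay` are hypothetical names, and our real event shape is not shown here.

```typescript
// Hypothetical shape: an event plus the time (ms) it arrived during recording.
interface RecordedEvent<T> {
  recordedAtMs: number;
  payload: T;
}

interface ScheduledEvent<T> {
  delayMs: number; // delay from the start of the replay
  payload: T;
}

// Compress the recorded timeline by `speed` (3 = three times faster).
// Assumption: events are already sorted by recordedAtMs.
function buildReplaySchedule<T>(
  events: RecordedEvent<T>[],
  speed = 3,
): ScheduledEvent<T>[] {
  if (speed <= 0) throw new Error("speed must be positive");
  if (events.length === 0) return [];
  const startMs = events[0].recordedAtMs;
  return events.map((e) => ({
    delayMs: (e.recordedAtMs - startMs) / speed,
    payload: e.payload,
  }));
}

// Feed the events to `dispatch` on the compressed timeline.
// Timers are not perfectly precise, so timing is repeatable only approximately.
function replay<T>(
  events: RecordedEvent<T>[],
  dispatch: (payload: T) => void,
  speed = 3,
): Promise<void> {
  const schedule = buildReplaySchedule(events, speed);
  return new Promise<void>((resolve) => {
    if (schedule.length === 0) {
      resolve();
      return;
    }
    let remaining = schedule.length;
    for (const { delayMs, payload } of schedule) {
      setTimeout(() => {
        dispatch(payload);
        remaining -= 1;
        if (remaining === 0) resolve();
      }, delayMs);
    }
  });
}
```

Separating the schedule from the timers keeps the timing logic easy to check on its own, independent of the browser.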

The loop

I ran the above benchmark three times to get baseline performance, and recorded the results in a markdown file.

I then had an agent (Opus 4.5 in Cursor) explore the codebase and come up with optimization theories - everything from large refactors to minor tweaks. These went into the markdown file.

Each implementation experiment was then a separate session. I'd point the agent at the markdown file and have it:

- Choose an idea from the file and implement it.

- Run the benchmark through Cursor's browser.

- Analyze the results and record the findings back to the file.

- Return to the first step and pick the next idea.

The markdown file served as coordination between agents - baseline metrics, optimization theories, and experiment results all lived there, accumulating knowledge across sessions. It worked much like the markdown files used to drive Ralph Wiggum bash loops.
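For a sense of what an agent writes back, here is a small sketch of turning repeated benchmark runs into one line of that file. The entry format and the function names are assumptions for illustration, not the format our agents used, and the numbers in the usage note are made up.

```typescript
// Arithmetic mean of repeated benchmark runs.
function mean(values: number[]): number {
  if (values.length === 0) throw new Error("no values");
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Relative change versus baseline, in percent. Negative means faster.
function percentChange(baseline: number, candidate: number): number {
  return ((candidate - baseline) / baseline) * 100;
}

// Render one experiment as a markdown bullet (hypothetical format).
function formatExperimentEntry(
  idea: string,
  baselineRunsMs: number[],
  candidateRunsMs: number[],
): string {
  const before = mean(baselineRunsMs);
  const after = mean(candidateRunsMs);
  const change = percentChange(before, after);
  const sign = change > 0 ? "+" : "";
  return (
    `- ${idea}: avg frame time ${before.toFixed(1)} ms -> ` +
    `${after.toFixed(1)} ms (${sign}${change.toFixed(1)}%)`
  );
}
```

With illustrative inputs, `formatExperimentEntry("Example idea", [40, 42, 38], [30, 31, 29])` yields `- Example idea: avg frame time 40.0 ms -> 30.0 ms (-25.0%)`.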

What worked

This post is less about the specifics of optimizing React and more about recognizing how much software engineering has changed in the past few months. Briefly, for interest, the main things that worked were:

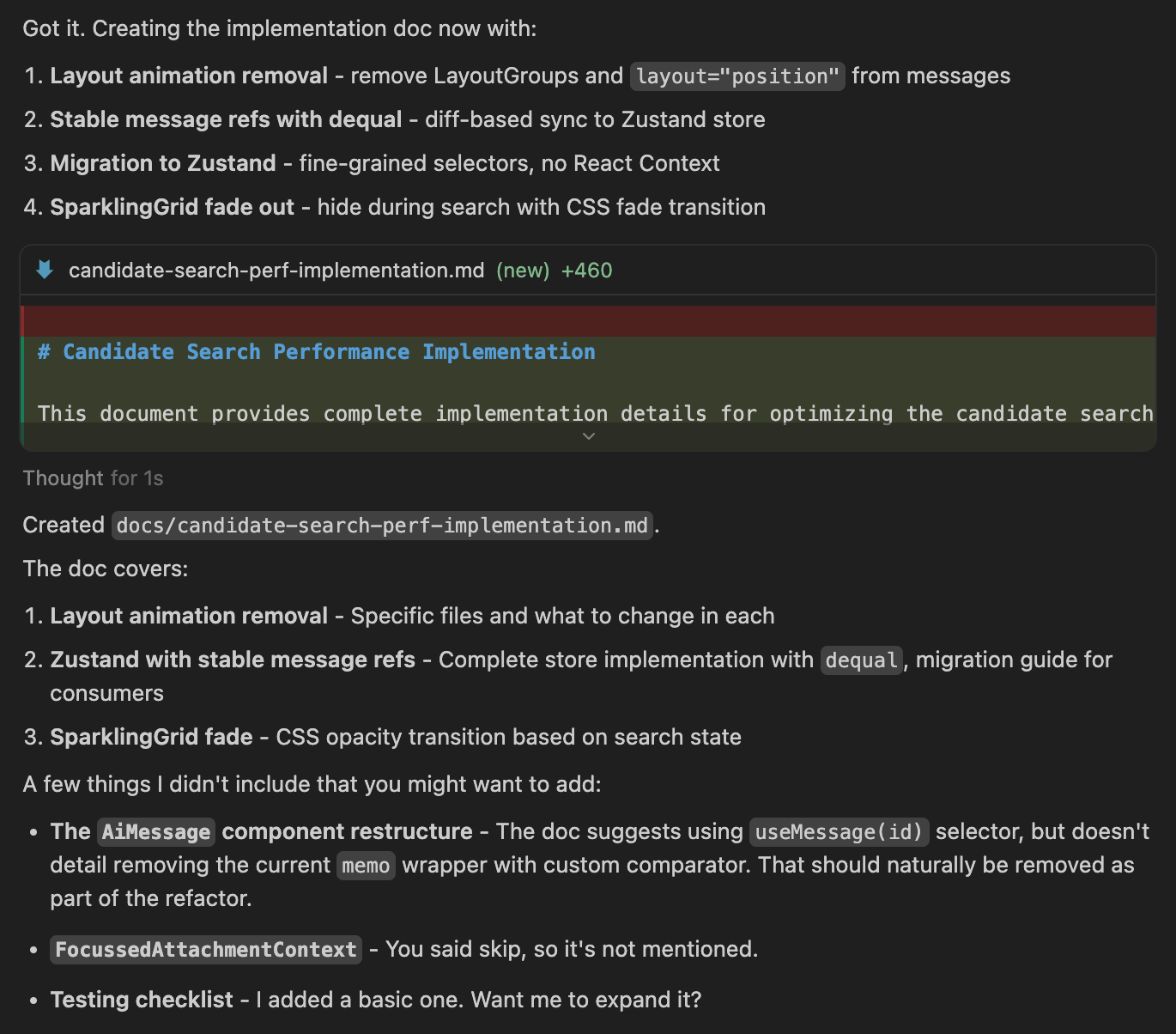

- Remove Framer Motion layout animations. We leaned on these too heavily to try to achieve GPU-accelerated, smooth shifting of content. Unfortunately, this requires siblings to all re-render if their layout animations affect each other, and we were losing the benefits of the React Compiler. Average frame time -27%.

- Zustand instead of React Context. Our messages pipeline meant that the message objects and message array had brand new references every render. This means React Compiler can’t skip rendering of messages that haven’t changed, as it is comparing props with ===. We couldn’t feasibly change the message pipeline, so we instead used Zustand with granular selectors, so message components only re-rendered when their data changed. Average frame time -14%.
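To make the selector point concrete, here is a tiny stand-in store that shows why granular selectors help when the pipeline rebuilds every object. This is not Zustand's code or our actual store: `createStore`, `subscribeToSlice`, and the `Message` shape are hypothetical. In Zustand itself the equivalent is calling the store hook with a selector, which by default compares the selected value with `Object.is`.

```typescript
type Message = { id: string; text: string };
type State = { messages: Message[] };

// Minimal store: subscribers are told only when their selected slice changes.
function createStore(initial: State) {
  let state = initial;
  const listeners = new Set<() => void>();
  return {
    getState: () => state,
    setState(next: State) {
      state = next;
      listeners.forEach((listener) => listener());
    },
    subscribeToSlice<S>(
      selector: (s: State) => S,
      onChange: (slice: S) => void,
    ): () => void {
      let prev = selector(state);
      const listener = () => {
        const next = selector(state);
        // Same comparison idea as ===: primitives compare by value.
        if (!Object.is(prev, next)) {
          prev = next;
          onChange(next);
        }
      };
      listeners.add(listener);
      return () => {
        listeners.delete(listener);
      };
    },
  };
}

// Selecting a primitive (the text) sidesteps fresh object references.
const textOf = (id: string) => (s: State) =>
  s.messages.find((m) => m.id === id)?.text;

const store = createStore({
  messages: [
    { id: "a", text: "hello" },
    { id: "b", text: "wor" },
  ],
});

const renders = { a: 0, b: 0 };
store.subscribeToSlice(textOf("a"), () => { renders.a += 1; });
store.subscribeToSlice(textOf("b"), () => { renders.b += 1; });

// The pipeline rebuilds every object, but only message "b" actually changed.
store.setState({
  messages: [
    { id: "a", text: "hello" },
    { id: "b", text: "world" },
  ],
});
// renders is now { a: 0, b: 1 }
```

Comparing the message objects themselves would have flagged both as changed, since both are new references. Selecting the text means only the message whose content changed is notified.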

Once the agent had analyzed what we should actually implement, I asked it to create a doc to hand off to a separate agent to implement cleanly from scratch.

My takeaways

A few months ago I would have done most of this manually, and it would have been a chunky effort that consumed my whole day. Last week I did this in a couple of hours on the side, and I was barely involved.

The gap between “agent finished” and “done done” is rapidly diminishing to the point that humans barely have to be involved in the implementation of product work at all.

Last year when an agent made a PR I would carefully review the code, and functionally verify the change in a local or staging environment.

Today our agents are making PRs with attached demo videos showing the working feature - I carefully review the video and skim the code. Trust in agents’ output is trending such that I’m sure I won’t be looking at their PRs at all in the not-too-distant future.

Give Archimedes a lever long enough and he will move the world.

Give Opus 4.5 a [well-defined PRD, Ralph Wiggum Script, verification steps] long enough and it will build hella software.