Syncing a Transcript with Audio in React

At Metaview, we help companies run amazing interviews. We achieve this in a number of ways, including training interviewers with automated interview shadowing and coaching them with personalized, contextual feedback.

Importantly, we provide a transcript of the interview so that interviewers effectively have perfect recall of what happened. This frees them from note-taking during the interview and means they don’t have to rely on memory when trying to recall details.

When reviewing such transcripts in our web app, the audio from the interview can be played back. In this post we’ll explore how to visually sync the transcript with the audio so that the interviewer can follow along without effort.

Here’s an example:

Doing this in React comes with some challenges. We need a way to play audio while programmatically knowing the changing playback position. We then need to animate a visual marker of the active word. Importantly, we want this page to perform well across a range of devices, including achieving high frame rates on low-powered devices.

Let’s dive in!

Implementation

First, let’s get our audio playback up and running. To keep things simple for now, we’ll use the built-in <audio> element, which comes with its own playback UI.

To respond to changes in the timestamp as the audio plays, we can listen to the timeupdate event. It is fired “when the time indicated by the currentTime attribute has been updated”. In order to subscribe to this event, we attach a ref to the audio element so we can access its addEventListener function.

```tsx
import React, { useEffect, useRef } from "react";

const Player: React.FC<{ audioSrc: string }> = ({ audioSrc }) => {
  const playerRef = useRef<HTMLAudioElement>(null);

  useEffect(() => {
    // Capture the element so the cleanup removes the listener from the same node
    const player = playerRef.current;
    if (!player) return;

    const onTimeUpdate = () => {
      console.log(player.currentTime);
    };

    player.addEventListener("timeupdate", onTimeUpdate);

    return () => player.removeEventListener("timeupdate", onTimeUpdate);
  }, []);

  // controls={true} displays the <audio> UI
  return <audio controls src={audioSrc} ref={playerRef} />;
};
```

We’re using useEffect to subscribe to the player’s timeupdate event, and logging the currentTime to the console for now. The function returned from useEffect is the cleanup function; it removes the event listener when the component unmounts.

Performance

Here’s where things start to get trickier. You might be tempted to:

- Store the changing currentTime value in React state
- Pass this as a prop to a child component that highlights the currently-spoken word in the UI
- Call it a day

This will probably work fine on your powerful development machine. If only all of our transcript users were so lucky. We want this interface running at 60fps on a potato, so we’ll need to think carefully.

The timeupdate event fires very frequently (somewhere between about 4Hz and 66Hz, depending on system load), and plugging it straight into React state will trigger a React render on every update. In small component trees this might be fine, but we want to be able to build complex features on top of this transcript component and still have it render at 60fps on low-powered devices.

Thankfully there is another way!

Modifying styles directly

How you style the currently-spoken word depends on the shape of your transcript data. At Metaview, each word comes with a startTime and endTime so we know when the word was spoken relative to the start of the audio.

currentTime is in seconds, so we need to make sure that we’re comparing it with other time values in seconds, or converting between units appropriately. Later on, I’ll show you a neat way to get TypeScript to help us with this.

Here’s the idea:

- In the timeupdate event handler, find the currently-spoken word. This can be done by comparing the player’s currentTime to the startTime and endTime of each word: the active word is the one where startTime <= currentTime < endTime.
- Using refs, find the DOM element corresponding to this word and change its styles (by adding properties to its style object, or by adding a CSS class) to make it stand out.
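The lookup step can be pulled out into a standalone function. This is a minimal sketch, assuming a hypothetical Word shape with startTime and endTime in seconds, and a transcript sorted by time with no overlapping words. It swaps the straightforward linear scan for a binary search: because the words are sorted, each timeupdate only costs O(log n) comparisons, which starts to matter for hour-long interviews.

```typescript
// Hypothetical word shape: times are seconds from the start of the audio.
interface Word {
  text: string;
  startTime: number;
  endTime: number;
}

// Returns the index of the word being spoken at `time`, or -1 if none
// (before the first word, after the last, or in a pause between words).
// Assumes `words` is sorted by startTime and words do not overlap.
function findActiveWordIndex(words: readonly Word[], time: number): number {
  let lo = 0;
  let hi = words.length - 1;
  while (lo <= hi) {
    const mid = Math.floor((lo + hi) / 2);
    const word = words[mid];
    if (time < word.startTime) {
      hi = mid - 1; // active word (if any) is earlier
    } else if (time >= word.endTime) {
      lo = mid + 1; // active word (if any) is later
    } else {
      return mid; // startTime <= time < endTime
    }
  }
  return -1;
}

const words: Word[] = [
  { text: "Tell", startTime: 0.0, endTime: 0.3 },
  { text: "me", startTime: 0.3, endTime: 0.45 },
  { text: "about", startTime: 0.5, endTime: 0.8 },
];

console.log(findActiveWordIndex(words, 0.35)); // 1
console.log(findActiveWordIndex(words, 0.47)); // -1 (pause between "me" and "about")
```

Returning -1 for pauses lets the caller decide whether to clear the highlight or leave it on the last spoken word.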

Let’s modify the code from before to implement this:

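```tsx
import React, { useEffect, useRef } from "react";

interface Props {
  // startTime and endTime are in seconds from the start of the audio
  transcript: { words: { text: string; startTime: number; endTime: number }[] };
  audioSrc: string;
}

const Transcript: React.FC<Props> = ({ transcript, audioSrc }) => {
  const playerRef = useRef<HTMLAudioElement>(null);
  const wordsRef = useRef<HTMLSpanElement>(null);

  useEffect(() => {
    const player = playerRef.current;
    const wordsContainer = wordsRef.current;
    if (!player || !wordsContainer) return;
    let previousWord: Element | undefined;

    const onTimeUpdate = () => {
      const time = player.currentTime;
      // The active word is the one whose [startTime, endTime) range contains the playhead
      const activeWordIndex = transcript.words.findIndex(
        (word) => word.startTime <= time && time < word.endTime
      );
      const wordElement =
        activeWordIndex === -1 ? undefined : wordsContainer.children[activeWordIndex];
      if (wordElement === previousWord) return;

      // Mutate the DOM directly instead of setting React state, so no re-render happens
      previousWord?.classList.remove("active-word");
      wordElement?.classList.add("active-word");
      previousWord = wordElement;
    };

    player.addEventListener("timeupdate", onTimeUpdate);
    return () => player.removeEventListener("timeupdate", onTimeUpdate);
  }, [transcript]);

  return (
    <div>
      <span ref={wordsRef}>
        {transcript.words.map((word, i) => (
          <span key={i}>{word.text} </span>
        ))}
      </span>
      <audio controls src={audioSrc} ref={playerRef} />
    </div>
  );
};
```

This code is slightly simplified and omits some boilerplate. active-word is a CSS class containing styles, such as a light background color, to make the active word stand out; the class is removed from the previously active word, so only one word is highlighted at a time. This is how the following word highlighting was implemented: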

The main thing to note is that we’re highlighting the active words outside of React’s render cycle by mutating DOM properties in a callback.

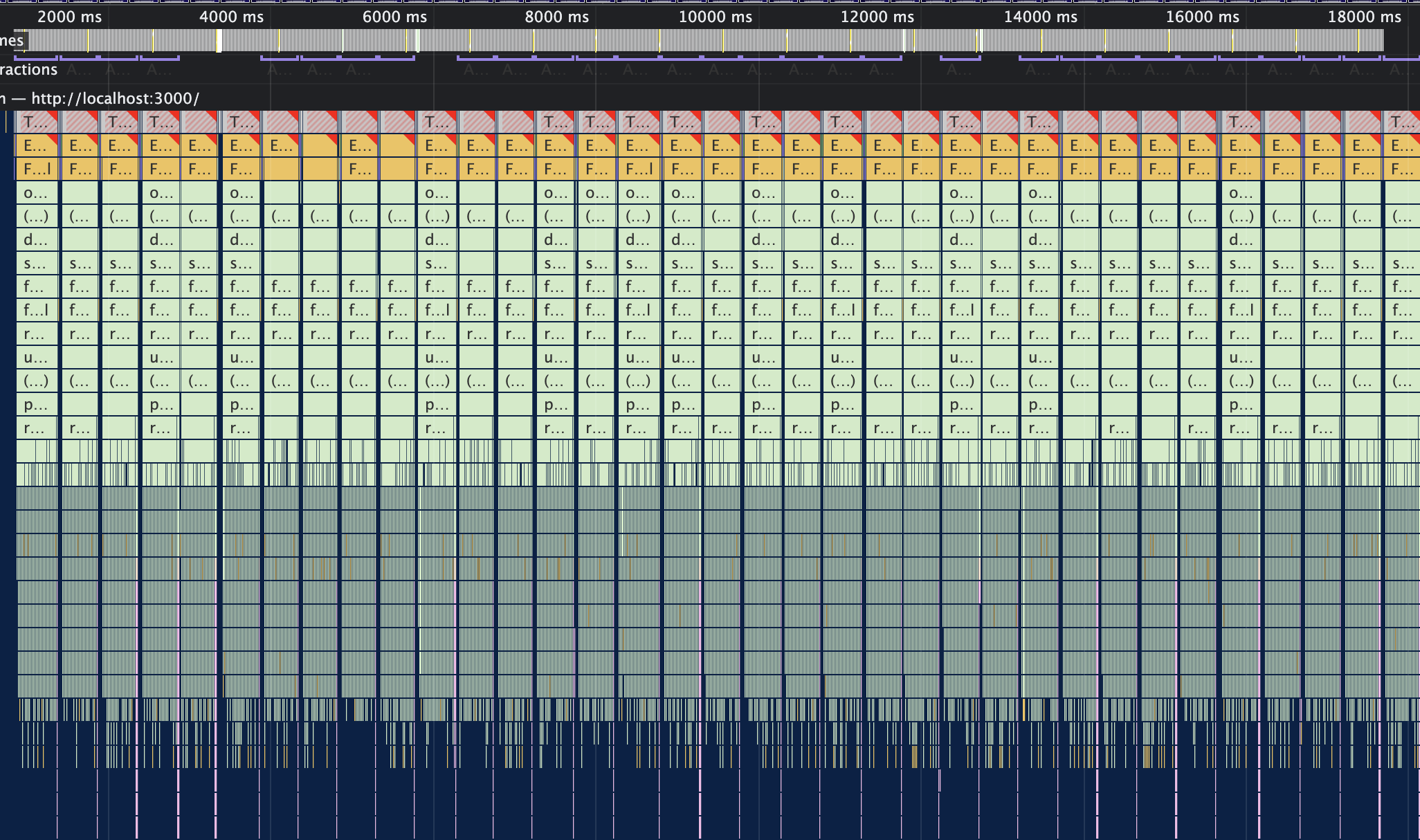

Performance comparison

Here’s a brief demonstration of the performance difference between storing currentTime in state and reactively responding to updates versus using callbacks and refs to skip React’s render.

To do this, I’m using the Chrome dev tools to throttle my CPU to a 6x slowdown (to simulate a low-powered device) and recording a trace.

Storing in state:

Here’s the flame chart from the performance tab. It’s a sea of red; users on low-powered devices would not be happy. The interface feels sluggish and unresponsive, the active token crawls along significantly behind the audio, and the CPU is straining under the weighty heft of React’s render.

Each timeupdate event takes more than 400ms to handle (and that’s being generous). Considering that achieving 60fps gives us a budget of roughly 16ms per frame, we are way off target here.

And this is with a small test React app with a tiny component tree. The latency would be even higher in larger real-world apps.

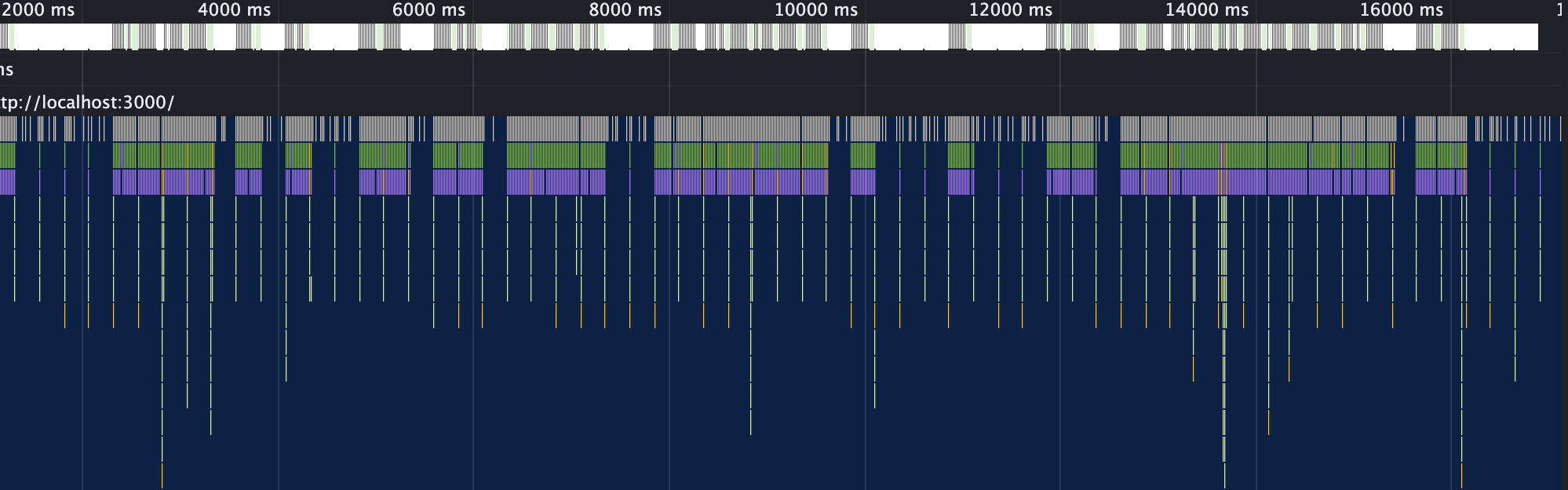

Using callbacks and refs:

Buttery smooth, not a dropped frame in sight. Users are happy, the CPU is happy, the active token marker is happily skipping along, perfectly aligned with the audio. And yes, this is still with the 6x CPU throttle. The timeupdate event is handled in <1ms and results in 60fps.

Compare that to the almost-half-second event handling attempts from before.

You get the picture.
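To keep an eye on the frame budget outside of a devtools trace, one option is to time the handler itself. The sketch below uses a hypothetical helper, withBudgetWarning, that is not part of the original code. It only measures the synchronous work done inside the call; work scheduled for later, such as a batched React render or the browser’s layout and paint, won’t show up in its numbers.

```typescript
// At 60fps the browser has roughly 1000 / 60 ≈ 16.7ms to produce each frame.
const FRAME_BUDGET_MS = 1000 / 60;

// Hypothetical helper: wraps an event handler and reports how long a call took
// whenever it exceeds `budgetMs`. Uses the global performance.now() clock.
function withBudgetWarning<Args extends unknown[]>(
  name: string,
  handler: (...args: Args) => void,
  onOverBudget: (name: string, durationMs: number) => void,
  budgetMs: number = FRAME_BUDGET_MS,
): (...args: Args) => void {
  return (...args: Args) => {
    const start = performance.now();
    handler(...args);
    const durationMs = performance.now() - start;
    if (durationMs > budgetMs) {
      onOverBudget(name, durationMs);
    }
  };
}

// Usage (in the browser), wrapping the timeupdate handler before subscribing:
// player.addEventListener(
//   "timeupdate",
//   withBudgetWarning("timeupdate", onTimeUpdate, (n, ms) =>
//     console.warn(`${n} took ${ms.toFixed(1)}ms`)),
// );
```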

Accessing currentTime outside timeupdate

What if we need to know the current time position outside of the timeupdate callback? A real example we faced was implementing keyboard shortcuts for skipping backwards and forwards.

We use MousetrapJS for binding keyboard shortcuts to callbacks. How could we implement a YouTube-like shortcut to skip backwards 10 seconds?

```tsx
useEffect(() => {
  Mousetrap.bind('j', () => {
    // Skip backwards 10 seconds
  });

  return () => Mousetrap.unbind('j');
}, []);
```

Storing the currentTime in state seems like a bad idea. Not only will our FPS suffer (see above), but we’ll also be binding and unbinding a new callback every time the position changes.

With a callback such as () => requestSeek(currentTime - 10), we would need to put currentTime in the useEffect dependency array to make sure that the callback has an up-to-date value.

Enter Stage Left: Refs

The useRef hook creates a mutable ref object; changing the value of its .current property doesn’t trigger a React render. It’s useful for more than just accessing DOM nodes.

We can store arbitrary mutable values in refs. This seems like a good option for storing currentTime. Let’s create a ref and update it when the timeupdate event fires. We can now bind our skip 10s shortcut like so:

```tsx
useEffect(() => {
  Mousetrap.bind('j', () => {
    requestSeek(positionSecondsRef.current - 10);
  });

  return () => Mousetrap.unbind('j');
}, [requestSeek, positionSecondsRef]);
```

positionSecondsRef is the same ref object on every render, and requestSeek doesn’t change as long as we create it with useCallback (with stable dependencies), so the shortcut will be bound only once.
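This works without rebinding because the callback closes over the ref object, not over a snapshot of its value. Below is a framework-free sketch of the same idea: a plain { current } object stands in for useRef, and requestSeek and skipTarget are hypothetical stand-ins. The clamp to [0, duration] is an addition not in the original snippet, so skipping back near the start lands on 0 instead of a negative position.

```typescript
// Mirrors the shape of a React ref object: a mutable box whose `current`
// property can change without triggering a render.
interface MutableRef<T> {
  current: T;
}

// Hypothetical helper: the seek target for a skip of `delta` seconds
// (negative to go backwards), clamped to the range [0, duration].
function skipTarget(position: number, delta: number, duration: number): number {
  return Math.min(Math.max(position + delta, 0), duration);
}

// Stand-ins for the component's positionSecondsRef and requestSeek.
const positionSecondsRef: MutableRef<number> = { current: 0 };
const seekRequests: number[] = [];
const requestSeek = (position: number): void => {
  seekRequests.push(position);
};
const durationSeconds = 120;

// Created once, like the callback bound with Mousetrap: it reads the ref at
// call time, so it always sees the latest position without being re-created.
const onSkipBack = (): void =>
  requestSeek(skipTarget(positionSecondsRef.current, -10, durationSeconds));

// Simulate timeupdate events moving the playhead, then pressing "j".
positionSecondsRef.current = 4;
onSkipBack(); // requests 0, not -6
positionSecondsRef.current = 65;
onSkipBack(); // requests 55
```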

Aside: Type branding

We’re dealing with time values in a lot of different places, and have to be careful with inconsistent units. The audio currentTime property is in seconds, so we need to make sure we’re not passing it into functions that accept milliseconds, or comparing it with values in milliseconds.

Since we’re using TypeScript, we can get some help from the type system in the form of compile-time checks. With ‘type branding’ we can define a type Seconds so we get errors if we use it in the wrong way, while still being able to pass it around as a number.

```ts
type Brand<K, T> = K & { __brand: T };

type Seconds = Brand<number, 'Seconds'>;

const x: Seconds = 5 as Seconds;

function seekToPosition(position: Seconds) {
  // ...
}

seekToPosition(x); // Compiles
seekToPosition(5); // Compile error: Argument of type 'number' is not
                   // assignable to parameter of type 'Seconds'.
seekToPosition(5 as Seconds); // Compiles
```

I personally prefer this to embedding the units of such values in variable names, as it keeps names concise.
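Extending the same idea to a second unit makes conversions explicit. This is a minimal sketch, assuming a hypothetical Milliseconds brand and toMilliseconds/toSeconds helpers. The __brand property only exists at the type level, so the casts cost nothing at runtime. One caveat: because branded types are still numbers, TypeScript allows relational operators such as <= between Seconds and Milliseconds without complaint. The brand protects function boundaries, but converting to a common unit before comparing is still up to you.

```typescript
type Brand<K, T> = K & { __brand: T };

type Seconds = Brand<number, "Seconds">;
type Milliseconds = Brand<number, "Milliseconds">;

// The only casts between units live in these two conversion helpers.
const toMilliseconds = (s: Seconds): Milliseconds => (s * 1000) as Milliseconds;
const toSeconds = (ms: Milliseconds): Seconds => (ms / 1000) as Seconds;

// Hypothetical data: word timings in milliseconds, playback position in seconds.
const wordStart = 1500 as Milliseconds;
const playhead = 2 as Seconds;

// Caveat: `wordStart <= playhead` would also compile (both are still numbers),
// so convert to a common unit before comparing.
const hasStarted: boolean = wordStart <= toMilliseconds(playhead);

let lastSeek = 0;
function seekToPosition(position: Seconds): void {
  lastSeek = position; // stand-in for seeking the audio element
}

seekToPosition(toSeconds(wordStart)); // Compiles: seeks to 1.5 seconds
// seekToPosition(wordStart);         // Compile error: not assignable to parameter of type 'Seconds'
```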

Pretty playback

Here are some brief tips on how to implement a couple of different styles.

Moving highlight behind words

This style moves a rectangle smoothly from word to word by animating its position and size. As in the previous example, we find the DOM node corresponding to the active word. We then use the node’s offsetTop, offsetLeft, offsetWidth and offsetHeight properties to know where to place the highlight. Adding a transition property to the highlight’s styles makes the position and size animated.
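A sketch of the positioning step, assuming the highlight is absolutely positioned inside the same offsetParent as the word spans so the offset coordinates line up. The helper name, the structural types and the 150ms transition timing are all hypothetical choices; a real HTMLElement and its style object satisfy these types, and so do plain objects, which keeps the logic testable.

```typescript
// The subset of HTMLElement layout properties the highlight needs.
interface LayoutBox {
  offsetTop: number;
  offsetLeft: number;
  offsetWidth: number;
  offsetHeight: number;
}

// The subset of CSSStyleDeclaration this sketch writes to.
interface HighlightStyle {
  top: string;
  left: string;
  width: string;
  height: string;
  transition: string;
}

// Hypothetical timing; this could equally live in a stylesheet.
const HIGHLIGHT_TRANSITION =
  "top 150ms ease, left 150ms ease, width 150ms ease, height 150ms ease";

// Hypothetical helper: move and resize the highlight to cover the active word.
// The transition makes the browser animate between the old and new box.
function moveHighlight(style: HighlightStyle, word: LayoutBox): void {
  style.transition = HIGHLIGHT_TRANSITION;
  style.top = `${word.offsetTop}px`;
  style.left = `${word.offsetLeft}px`;
  style.width = `${word.offsetWidth}px`;
  style.height = `${word.offsetHeight}px`;
}

// In the browser: moveHighlight(highlightEl.style, activeWordEl as HTMLElement);
```

Reading the offset properties can force a layout, so it is worth calling this only when the active word actually changes, not on every timeupdate.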

Highlighting the current word

This is similar to the example before, but we don’t animate the position or size. Instead, the highlight element is on top of the words, and we also set its textContent property to the value of the active word DOM node’s textContent.
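A sketch of this overlay variant, assuming the overlay is absolutely positioned in the same container as the words and styled with the same font. The helper and type names are hypothetical. Unlike the moving highlight, it doesn’t set a transition, so the overlay jumps from word to word.

```typescript
// Minimal structural types so the sketch works with real DOM nodes or plain objects.
interface TextSource {
  textContent: string | null;
}
interface OverlayElement extends TextSource {
  style: { top: string; left: string };
}
interface WordBox extends TextSource {
  offsetTop: number;
  offsetLeft: number;
}

// Hypothetical helper: place the overlay on top of the active word and copy
// its text. Writing textContent (not innerHTML) means transcript text is
// never parsed as markup.
function showActiveWord(overlay: OverlayElement, word: WordBox): void {
  overlay.style.top = `${word.offsetTop}px`;
  overlay.style.left = `${word.offsetLeft}px`;
  overlay.textContent = word.textContent;
}
```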

We use textContent and not innerText as it is more performant. Reading innerText triggers a reflow, which is not the case for `textContent`.

We also use textContent and not innerHTML. Writing to innerHTML has lower performance, as the value is parsed as HTML. More importantly though, it leaves you vulnerable to XSS(!). Imagine if a candidate said in the interview “left angled bracket script right angled bracket alert left parenthesis quote got your cookie quote right parenthesis...” (etc.).

OK, maybe not, but it’s good to be safe anyway.

Closing thoughts

That’s it! This should be enough to get started, but there’s much more to building a delightful transcript page such as using a custom audio player UI, video playback, code playback, and more.